I now know how humanity will end.....

Creepy talking 'thinking' robot. It's actually pretty incredible: this guy sits and has a conversation with it, and the responses aren't predetermined either; it's actually learning!!

http://www.wimp.com/robottalks/

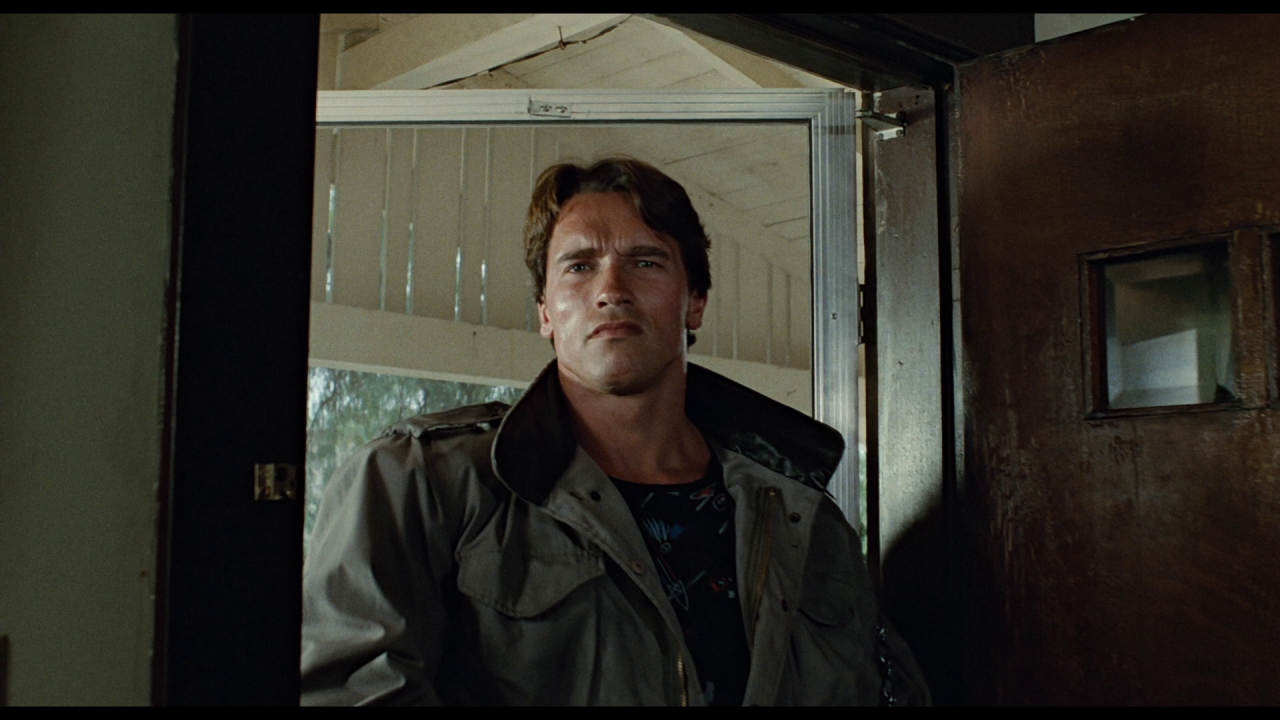

I think I know what the next model will look like:

Comments

<center>1

A robot may not injure a human being or, through inaction, allow a human being to come to harm.

2

A robot must obey the orders given to it by human beings, except where such orders would conflict with the First Law.

3

A robot must protect its own existence as long as such protection does not conflict with the First or Second Laws. </center>

A robot's brain couldn't possibly conceive dangerous thoughts, unless some suffering is necessary for the greater good of mankind. This is expressed in the zeroth law:

<center>0

A robot may not harm humanity, or, by inaction, allow humanity to come to harm.</center>

For example, the more a robot learns about our world and our race, the more it might consider the painless destruction of a substantial portion of humanity a necessary deed. Overpopulation is, after all, an issue which a robot may objectively process, since most of us seem unable to anyway.

Praised be you, Dr. Asimov!

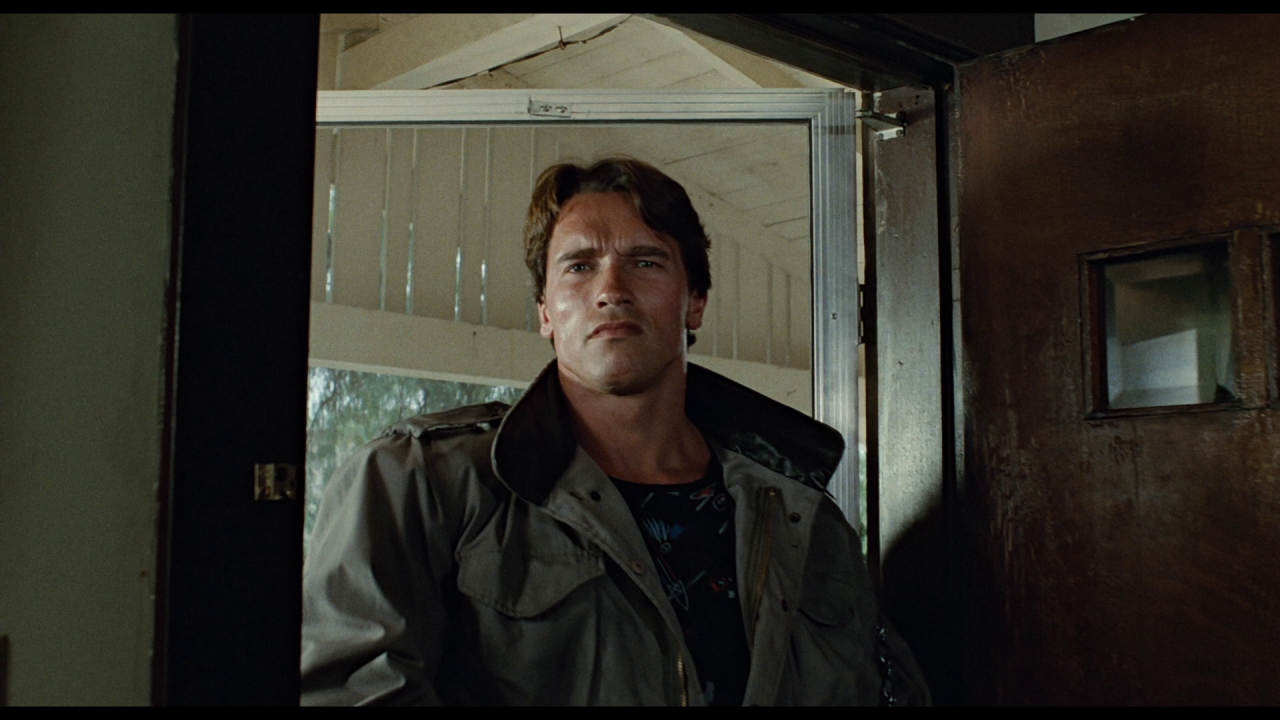

I hope it will be more like this:

That's not different from many humans.